The Hidden Cost of 'AI Saviors': Why Ravender Pal Singh's Math Background Exposes Silicon Valley's Shallow Hype Cycle

The journey from pure mathematics to AI innovation isn't just career progression; it's a warning about AI safety. Unpacking the real stakes.

Key Takeaways

- The industry's reliance on empirical success over theoretical proof is unsustainable for critical applications.

- The return to mathematical foundations signals a maturing, albeit forced, phase of AI development.

- Regulatory scrutiny will intensify if verifiable safety proofs are not established quickly.

- Future AI leaders will be those who can guarantee stability through rigorous mathematics, not just novelty.

The Hook: Are We Building Gods or Golems on Shaky Math?

Every week, a new tech visionary emerges, promising salvation through AI. But when you peel back the venture-capital gloss, what foundation remains? The story of figures like Ravender Pal Singh, who moved from rigorous mathematical modeling to applied AI, offers a rare, unsanitized look behind the curtain. This isn't just a success story; it's a critical commentary on how much of today's technology is built on shaky theoretical ground, prioritizing speed over stability. The real story isn't the innovation; it's the implicit admission that the current AI landscape desperately needs foundational rigor.

The 'Meat': From Abstract Theory to Urgent Reality

Singh's trajectory, from the abstract purity of mathematical modeling to the chaotic deployment of real-world AI systems, highlights a dangerous disconnect in the industry. Too many current AI safety protocols are bolted-on patches applied to models whose core behaviors are only superficially understood. Mathematical modeling, the discipline he mastered, demands proof, structure, and demonstrable limits. Modern deep learning, by contrast, often thrives on correlation without causation, a mathematical sleight of hand that works until it doesn't.

The unspoken truth is that the industry is now desperately trying to recruit the very minds it once dismissed as too slow for the hyper-agile development cycle: the theoreticians and pure mathematicians. We are witnessing a frantic rush to inject sanity checks into systems designed for maximum velocity. Think of it: if the mathematics underpinning large language models (LLMs) were truly robust for high-stakes applications, we wouldn't need such a public, post-deployment pivot toward "safety engineering."

The 'Why It Matters': The Great Unbundling of Hype and Substance

Who truly wins when this transition happens? The VCs who bet on the initial hype lose some face, but the real winners are the engineers who can bridge the gap between theoretical proof and practical application. They become the ultimate gatekeepers. The losers are the end users and critical-infrastructure sectors (such as autonomous systems and finance) that have been operating on faith. We have outsourced complex decision-making to black boxes that lack the traceability mature engineering disciplines require. This isn't just about better algorithms; it's about heading off outside regulation. If industry leaders can't prove their systems won't fail catastrophically, governments will step in, and the resulting regulatory framework will stifle innovation far more than any internal mathematical review ever could. For a deeper look at the challenge of AI regulation, see the ongoing discussions surrounding the EU's AI Act.

Where Do We Go From Here? The Prediction

The next 18 months will not be defined by a breakthrough in generative capability but by a **'Mathematical Reckoning'** in AI. We will see high-profile failures, non-fatal but economically devastating, traced directly back to insufficient theoretical modeling: perhaps a massive financial anomaly or a critical infrastructure glitch. Such an event will force a hard pivot. Companies will stop hiring "prompt engineers" and start aggressively recruiting applied mathematicians and control theorists. The contrarian prediction is this: the next generation of dominant AI platforms will not be the most creative, but the most verifiable. Reliability rooted in proven mathematical frameworks will become the ultimate competitive advantage over raw, untamed intelligence. The shift would echo earlier transitions in engineering, such as the move toward formalized standards in early aviation.

Key Takeaways (TL;DR)

- The pivot to foundational rigor (math models) signals that current AI innovation is outpacing its theoretical safety net.

- The real power brokers in AI are shifting from pure coders to mathematicians who can enforce verifiable limits.

- We are heading for a "Mathematical Reckoning" triggered by a significant, non-fatal failure exposing systemic weakness.

- Future market dominance will favor verifiable, safe AI over merely powerful, unpredictable AI.

Frequently Asked Questions

What is the primary benefit of integrating mathematical models into modern AI?

The primary benefit is moving AI development from statistical correlation toward causal understanding, which provides the guarantees of safety and predictability, backed by formal verification, that current deep learning often lacks.

Why are industry leaders suddenly emphasizing AI safety and foundational science?

They are emphasizing it because the scale and autonomy of deployed AI systems are now reaching a point where unexpected failures carry catastrophic economic or physical risk, forcing a reactive pivot toward robustness.

What is the 'Mathematical Reckoning' predicted for the AI industry?

It is the predicted future event where a significant, high-cost failure directly attributable to a lack of foundational mathematical proof forces a systemic industry shift towards hiring theoreticians and adopting rigorous, verifiable engineering standards.

How does Ravender Pal Singh's background relate to current AI challenges?

His background represents the necessary bridge: translating the hard, verifiable logic of pure mathematical modeling into the fast-moving, often heuristic-driven world of applied AI, which addresses the industry's current deficit in rigor.

Related News

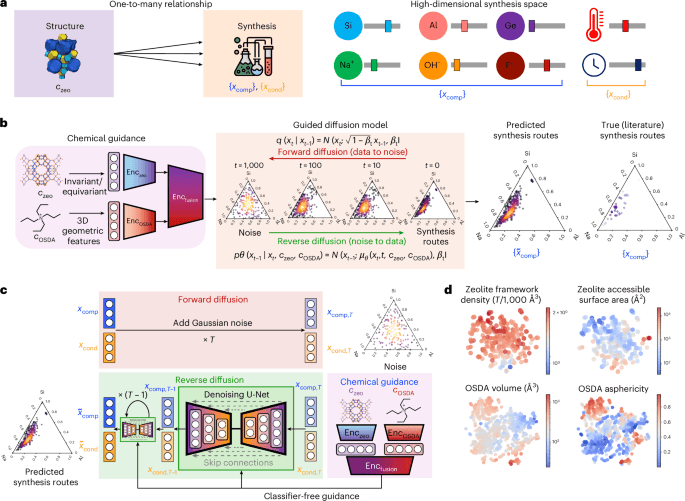

The AI Alchemy Revolution: Why DiffSyn Isn't Just New Science, It's a Threat to Traditional Chemistry Careers

Generative AI like DiffSyn is fundamentally reshaping materials science. Discover the unspoken winners and losers in this new era of chemical discovery.

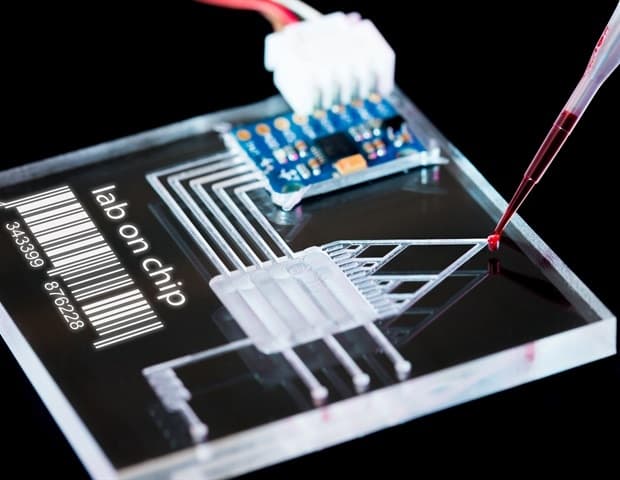

The Hidden Cost of Lab-Grown Organs: Why Simplified Microfluidics Will Bankrupt Traditional Biotech

Digital microfluidic technology is changing 3D cell culture, but the real story is the centralization of pharmaceutical power it enables.

The Silent Coup: Why Hillcrest's 'Success' with a Tier 1 Supplier Hides a Brutal Automotive Reality

Hillcrest's recent tech evaluation victory is less about innovation and more about the ruthless consolidation happening in automotive technology supply chains. Unpacking the hidden costs.