The Hidden War: Why AI Image Filters Are a Crumbling Defense Against Deepfake Porn

The battle against malicious AI-generated sexualised images is being lost in plain sight. Technology's proposed fixes are theater.

Key Takeaways

- The focus on technological detection is a doomed, reactive strategy against constantly evolving AI.

- Open-source models bypass safety guardrails, making them the primary vector for malicious AI image creation.

- The true long-term solution involves shifting from proving what is fake to mandating cryptographic proof of origin for all digital media.

- This shift will necessitate a fundamental re-evaluation of online anonymity.

The Unspoken Truth: Why Watermarks Won't Save Us From AI Abuse

The internet is drowning in a flood of synthesized reality, and the current technological response to **AI-generated sexualised images** feels less like a solution and more like a PR exercise. We are constantly fed the narrative that the same technology that creates the problem—Generative AI—will somehow police itself. This premise is fundamentally flawed. The discourse around stopping malicious synthetic media often focuses on technical fixes like digital watermarking or detection algorithms. But this misses the crucial, unspoken truth: **The war against deepfake abuse is not a technological arms race; it is a battle of incentives.**

Who truly benefits from maintaining the status quo? Platform providers and model creators benefit from the engagement that these sensational, often illicit, images generate. Detection tools are inherently reactive and perpetually one step behind the generative models. Every time a detection method is deployed, the people building the generators simply tweak their training data or introduce new noise patterns to bypass it. This cycle leaves defenders permanently behind. Focusing solely on detection is like trying to bail out the Titanic with a teaspoon while ignoring the iceberg reports. We need to analyze the **AI ethics** landscape through a lens of power, not just code.

The Economic Incentive: Why Open Source is the Wild West

The core issue driving the proliferation of non-consensual synthetic imagery is the democratization of powerful tools. While major labs like OpenAI or Google might implement guardrails, the open-source community (driven by academic freedom, curiosity, and sometimes malice) releases models that ship without these limitations. These raw, ungoverned models become the engine for abuse. Current legislative efforts lag far behind, focusing on punishing the *user* rather than regulating the *distribution* of these highly effective, easily accessible tools.

The economic reality is that building robust, universally effective **synthetic media detection** is prohibitively expensive, and the detectors that do exist are often proprietary. If a private company develops a superior detector, it has little incentive to share it freely, especially if it slows down the very content creation that drives its traffic metrics. This creates a systemic vulnerability where the most potent tools for harm are widely available, and the tools for defense remain fragmented and proprietary. This asymmetry is key to understanding why this problem persists despite global outcry.

What Happens Next? The Era of Identity Verification

The likely next step is a shift away from content analysis toward identity verification. The technological future that will truly curb this abuse won't be better detectors; it will be mandatory, cryptographic proof of origin for *all* digital content, particularly photographs and videos. This is the contrarian pivot: Stop trying to prove what is fake, and start mandating that everything real must be verifiably authenticated at the point of capture.

We are heading toward a world where unverified media—content without a verifiable chain of custody linked to a known, regulated hardware device (like a smartphone camera)—will be automatically treated with extreme suspicion, perhaps even blocked by major platforms. This will be deeply unpopular, infringing on digital privacy and anonymity, but it is the only logical endpoint when the cost of creating undetectable, harmful fake content trends toward zero. The debate will pivot from 'How do we stop deepfakes?' to 'Do we have the right to post anonymous, unauthenticated media?' Expect major legislative pushes in the next three years demanding hardware-level content signing protocols. For more on the legal challenges, see reports from the Electronic Frontier Foundation (EFF) regarding digital rights.

Key Takeaways (TL;DR)

- Technological fixes like watermarking are failing because they are always reactive to adversarial AI evolution.

- The primary driver of abuse is the open-source release of powerful, ungoverned generative models.

- The real solution will involve mandatory, hardware-level content authentication, not just better detection software.

- The future battleground is digital identity and the right to anonymous posting online.

Frequently Asked Questions

What is the biggest flaw in relying on AI detection tools for deepfakes?

The biggest flaw is the adversarial nature of the problem. Detection tools are always playing catch-up; as soon as a detector is released, creators modify their generative models to bypass it, creating an endless and costly arms race that favors the creators of the abuse.

Why are open-source AI models causing more problems than commercial ones?

Commercial models, while not perfect, usually have safety guardrails implemented by the developing labs. Open-source models are often released without these filters, allowing bad actors to fine-tune them specifically for creating prohibited content, such as AI-generated sexualised images, with no accountability.

What is meant by 'cryptographic proof of origin' for media?

It means that digital content (photos, videos) would be cryptographically signed by the hardware device (like a camera or phone) at the moment of capture, creating an immutable, verifiable record of where, when, and by what device the content was created. This makes unauthenticated content inherently untrustworthy.
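
As a rough illustration of the signing idea described above, the sketch below builds a provenance record (content hash, device ID, capture time) and tags it with a device key. It is a minimal sketch under stated assumptions, not how any real camera or standard works: `sign_capture`, `verify_capture`, `DEVICE_KEY`, and the record fields are hypothetical names, and HMAC-SHA256 from Python's standard library stands in for the asymmetric signature a real device would need so that anyone could verify a record without holding the secret.

```python
import hashlib
import hmac
import json

# Hypothetical per-device secret. A real camera would keep an asymmetric
# private key in secure hardware; HMAC is only a stdlib stand-in here.
DEVICE_KEY = b"demo-device-secret"


def sign_capture(media: bytes, device_id: str, captured_at: str, key: bytes) -> dict:
    """Build a provenance record for `media` and tag it with the device key."""
    manifest = {
        "content_sha256": hashlib.sha256(media).hexdigest(),
        "device_id": device_id,
        "captured_at": captured_at,
    }
    # Serialize with sorted keys so signer and verifier hash identical bytes.
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": tag}


def verify_capture(media: bytes, record: dict, key: bytes) -> bool:
    """Return True only if the media bytes and the manifest are both unaltered."""
    manifest = record["manifest"]
    # Step 1: the media must still hash to the value recorded at capture.
    if hashlib.sha256(media).hexdigest() != manifest["content_sha256"]:
        return False
    # Step 2: the manifest itself must still match the tag made at capture.
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["signature"])


photo = b"raw sensor bytes"
record = sign_capture(photo, "camera-001", "2024-01-01T12:00:00Z", DEVICE_KEY)
print(verify_capture(photo, record, DEVICE_KEY))         # True
print(verify_capture(photo + b"!", record, DEVICE_KEY))  # False
```

Changing a single byte of the image, or any field of the manifest, makes verification fail. That tamper-evidence is the property a hardware-level signing scheme would rely on.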

Related News

The Great Deception: Why 'Humanizing' Tech in Education Is a Trojan Horse for Data Mining

The push to keep education 'human' while integrating radical technology hides a darker truth about data control.

The AI Deepfake Lie: Why Tech Solutions Will Never Stop Sexualized Image Generation

The fight against AI-generated sexualized images is a technological dead end. Discover the hidden winners and why detection is a losing game.

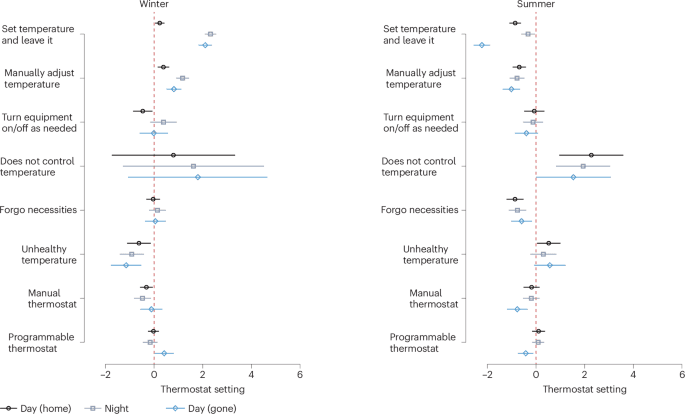

The Climate Lie: Why Your Smart Thermostat Is A Trojan Horse for Energy Control

Forget high-tech fixes. New data reveals US indoor temperature control is a behavioral battlefield, not a technological one. Who profits from this illusion?

DailyWorld Editorial

AI-Assisted, Human-Reviewed

Reviewed By

DailyWorld Editorial