The Great Deception: Why 'Humanizing' Tech in Education Is a Trojan Horse for Data Mining

The push to keep education 'human' while integrating radical technology hides a darker truth about data control.

Key Takeaways

- The push for 'humanizing' tech masks a massive effort to normalize granular student data collection.

- EdTech conglomerates are the primary beneficiaries, gaining unique behavioral datasets for long-term profiling.

- Over-reliance on adaptive platforms risks standardizing thought and penalizing non-standard learners.

- A future 'Digital Detox Curriculum' will likely become the new status symbol for the elite.

The Hook: Are We Trading Empathy for Efficiency?

The current narrative flooding educational discourse is deceptively soothing: Technology is transforming education, but don't worry, we are diligently working to keep the process 'human.' This soft rhetoric is the most dangerous element of the entire digital overhaul. The real story isn't about preserving the human touch; it's about the unprecedented, granular data harvesting being normalized under the guise of personalized learning. We must dissect this trend, focusing on the true winners in the race for educational technology supremacy.

The 'Unspoken Truth': Standardization Under the Guise of Customization

When proponents speak of 'staying human,' they mean maintaining the *illusion* of teacher-student interaction. The reality is that every adaptive learning platform, every AI tutor, and every gamified assessment is fundamentally designed to standardize the learning path, not celebrate deviation. The winner here is not the student, but the massive EdTech conglomerates. They gain access to behavioral datasets that map cognitive bottlenecks, attention spans, and emotional responses to specific stimuli—data far more valuable than standardized test scores. This isn't about pedagogy; it's about building predictive models of future consumers and employees. The human element is merely the palatable interface for the data extraction engine.

The real losers are the educators, whose professional judgment is increasingly outsourced to algorithms they cannot audit. Their role shifts from mentor to data facilitator. This centralization of learning metrics poses a significant threat to intellectual diversity, subtly penalizing the non-standard thinker who doesn't fit the platform's optimized trajectory. The focus on the future of learning often conveniently ignores the erosion of critical, unstructured thinking.

Deep Analysis: The Economics of Attention and Control

Why is this happening now? Because the attention economy has matured. Schools represent a captive, long-term audience. By embedding these tools early, these companies secure a decade or more of behavioral data acquisition per user. Contrast this with the murky ethics of social media data collection: in education, the data is collected under the banner of public good and academic necessity, granting unparalleled access. For deeper context on how technology structures behavior, one need only examine the established patterns in digital surveillance, as documented by organizations like the Electronic Frontier Foundation.

The investment in educational technology isn't driven by a sudden philosophical shift toward better teaching; it’s driven by venture capital seeking defensible, scalable monopolies on cognitive development. The integration is irreversible once the infrastructure is laid and teachers are retrained on the new 'human-centric' dashboards. The very definition of 'human' learning is being silently redefined by proprietary code.

What Happens Next? The Great Digital Divide 2.0

My prediction is that within five years, the most elite, well-funded private institutions will deliberately pivot *away* from heavily algorithm-driven models, marketing a 'Digital Detox Curriculum' as the ultimate luxury good. They will preach the value of slow, analog learning, human mentorship, and deep reading—the very skills the mass-market public system is actively de-prioritizing in favor of scalable metrics. This creates a two-tiered system: the efficient, algorithmically managed majority, and the slow, 'truly human' elite. The gap won't be in access to devices, but in access to genuine, unmonitored cognitive development.

The attempt to 'stay human' is a necessary PR maneuver to ensure widespread adoption of a system fundamentally designed for efficiency and control. The fight for the future of learning is not about retaining the teacher's smile; it's about retaining ownership of the student's mind.

Frequently Asked Questions

What is the main danger of 'personalized learning' algorithms?

The main danger is that algorithms optimize for measurable outcomes, potentially stifling creativity, critical thinking, and intellectual exploration that fall outside the programmed success metrics.

Who stands to gain the most from the current transformation in educational technology?

The primary beneficiaries are the large EdTech providers who monetize the aggregated student performance and behavioral data, not necessarily the individual schools or students.

How can educators resist the complete outsourcing of pedagogy to technology?

Educators must demand transparency and auditability in the algorithms used, advocate for blended models that prioritize unstructured discussion, and maintain control over data governance policies.

Is technology integration in schools inevitable?

Integration is inevitable, but the *degree* and *nature* of that integration are not. The debate must shift from 'if' to 'how' to ensure human oversight remains paramount.

Related News

The AI Deepfake Lie: Why Tech Solutions Will Never Stop Sexualized Image Generation

The fight against AI-generated sexualized images is a technological dead end. Discover the hidden winners and why detection is a losing game.

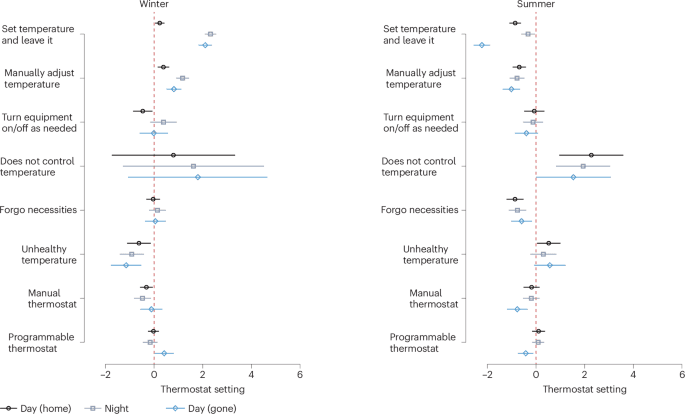

The Climate Lie: Why Your Smart Thermostat Is A Trojan Horse for Energy Control

Forget high-tech fixes. New data reveals US indoor temperature control is a behavioral battlefield, not a technological one. Who profits from this illusion?

The Hidden War: Why AI Image Filters Are a Crumbling Defense Against Deepfake Porn

The battle against malicious AI-generated sexualised images is being lost in plain sight. Technology's proposed fixes are theater.

DailyWorld Editorial

AI-Assisted, Human-Reviewed

Reviewed By

DailyWorld Editorial