The AI Grading Trap: Why the Deep Learning Essay Scorer Will Kill Critical Thinking

The new deep learning essay scorer promises efficiency, but the real cost to education and critical thinking is being ignored.

Key Takeaways

- The AI essay scorer rewards conformity and penalizes true intellectual risk-taking.

- The underlying training data dictates what the AI considers 'good' writing, often favoring formula over substance.

- This technology prioritizes administrative efficiency over the development of critical thinking skills.

- Future students will learn to 'game' the algorithm rather than mastering persuasive communication.

The Hook: The Silent Death of Nuance in Education

We are told the future of education is efficiency. A recent development involving a new Automated English Essay Scoring System, leveraging deep learning and the Internet of Things (IoT), is being hailed as a breakthrough. But let’s cut through the Silicon Valley gloss. This isn't about better teaching; it’s about scalable, cheap standardization. The real story here isn't the deep learning innovation; it's what gets lost when we outsource judgment to an algorithm.

The core functionality is simple: feed the machine an essay, get a grade. This technology, often touted under the banner of Artificial Intelligence in Education, aims to solve the massive workload faced by educators. But who truly wins when the grader is blind to sarcasm, context, or genuine lateral thought? The administrators win, obsessed with metrics and quantifiable outcomes. The testing companies win, selling the software. The students? They learn to write for the machine, not for humans.

The Unspoken Truth: Writing to the Algorithm’s Bias

Every machine learning model is a mirror reflecting its training data. If the training set consists primarily of formulaic, standardized test responses—essays that prioritize specific keyword density, rigid structural adherence, and predictable argumentation—then the AI rewards mimicry. It doesn't reward genius; it rewards conformity. This creates a perverse incentive structure where students actively suppress creativity to hit the machine’s narrow parameters. We are optimizing for mediocrity at scale.
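To make the incentive concrete, here is a minimal sketch of a scorer that looks only at surface features. It is a toy stand-in, not the deep learning system discussed in this article: the keyword list, the five-paragraph target, the 10% density cap, and the equal weights are all assumptions chosen for illustration. The point it illustrates is narrow: if a scorer ends up relying on features like these, a formulaic essay can outscore a more thoughtful one.

```python
import re

# Hypothetical "rubric" keywords; not taken from any real scoring system.
KEYWORDS = {"thesis", "furthermore", "evidence", "therefore", "conclusion"}


def surface_score(essay: str) -> float:
    """Score an essay from 0 to 10 using surface features only (toy model)."""
    words = re.findall(r"[a-z']+", essay.lower())
    if not words:
        return 0.0

    # Feature 1: share of words that are rubric keywords, capped at 10%.
    keyword_density = sum(w in KEYWORDS for w in words) / len(words)
    keyword_part = min(keyword_density / 0.10, 1.0)

    # Feature 2: closeness to a five-paragraph template (assumed target).
    paragraphs = [p for p in essay.split("\n\n") if p.strip()]
    structure_part = max(0.0, 1.0 - abs(len(paragraphs) - 5) / 5)

    # Equal weights are an arbitrary choice for this sketch.
    return round(10 * (0.5 * keyword_part + 0.5 * structure_part), 2)


# A template essay: five identical paragraphs stuffed with keywords.
formulaic = "\n\n".join(
    ["My thesis is clear. Furthermore, the evidence supports it. "
     "Therefore, in conclusion, I am right."] * 5
)

# A short, more reflective essay that ignores the template.
original = (
    "Grading is an act of reading, and reading is an act of trust.\n\n"
    "A machine that counts cannot be surprised."
)

print("formulaic:", surface_score(formulaic))
print("original: ", surface_score(original))
```

Under these assumptions the repetitive template essay scores higher than the reflective one, even though it says almost nothing. A trained neural network is far more complex than this, but the same dynamic applies whenever the features it learns track template and vocabulary rather than argument.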

The integration of IoT technology suggests these systems might even monitor student engagement or the environment during testing. This surveillance layer, often hidden behind the efficiency argument, introduces a level of control over the learning environment that should concern anyone valuing intellectual freedom. The supposed benefit of faster feedback is dwarfed by the long-term erosion of complex communication skills.

Why This Matters: The Commodification of Thought

The move toward automated essay scoring is a key marker in the broader commodification of intellectual output. When writing—the fundamental tool for philosophical discourse and complex problem-solving—is reduced to quantifiable features recognizable by a neural network, we signal that complexity is unnecessary overhead. This trend directly undermines the liberal arts mission, reducing persuasive writing to mere pattern matching. Similar automated scoring is already used in standardized testing and K-12 assessment; high-stakes admissions is the next frontier.

We must ask: What happens when the next generation of leaders, journalists, and scientists is trained to please an algorithm rather than persuade a skeptical audience? The robustness of democratic debate relies on the ability to articulate nuanced, well-supported arguments that defy simple classification. This technology actively trains against that necessity. It’s a systemic simplification of human expression.

What Happens Next? The Rise of 'Prompt Engineering' for Essays

My prediction is clear: within five years, high-stakes writing instruction will shift entirely. Instead of teaching students how to structure a compelling argument, educators—under pressure to show high standardized scores—will teach 'prompt engineering' for AI graders. Students will learn the precise linguistic triggers and structural blueprints that guarantee an A from the machine, regardless of the actual substance. Universities will start demanding portfolios that show human grading alongside AI scores to prove authenticity. The arms race between AI graders and AI-assisted students will only accelerate, leaving genuine learning in the dust.

For further reading on the impact of algorithmic bias in assessment, see studies from institutions like the National Institute of Standards and Technology (NIST) or recent analyses from the Reuters Institute for the Study of Journalism on AI's effect on information processing.

Frequently Asked Questions

What is the primary danger of using AI for essay scoring?

The primary danger is the creation of a feedback loop where students only learn to write what the algorithm expects, thereby stifling creativity, nuance, and complex critical thought that falls outside predefined parameters.

How does IoT technology factor into automated essay scoring systems?

While the core scoring relies on deep learning, IoT integration often relates to secure testing environments, remote proctoring, or collecting metadata about the testing conditions, adding layers of monitoring to the assessment process.

Are these systems currently used in major university admissions?

While widespread adoption for high-stakes admissions is still emerging, similar automated scoring technologies are heavily used by standardized testing bodies and in K-12 systems for initial screening and large-scale assessment.

What is 'prompt engineering' in the context of essay writing?

Prompt engineering, in this context, refers to the specific techniques students use to structure their essays—including keyword placement, sentence length variance, and argumentative scaffolding—designed explicitly to maximize a score from an existing AI grading model.

Related News

The Great Deception: Why 'Humanizing' Tech in Education Is a Trojan Horse for Data Mining

The push to keep education 'human' while integrating radical technology hides a darker truth about data control.

The AI Deepfake Lie: Why Tech Solutions Will Never Stop Sexualized Image Generation

The fight against AI-generated sexualized images is a technological dead end. Discover the hidden winners and why detection is a losing game.

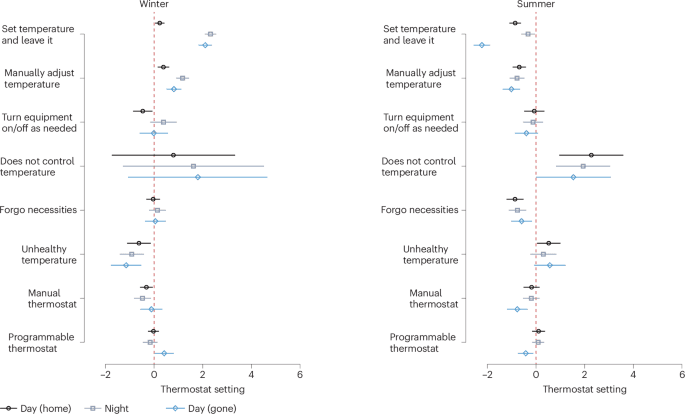

The Climate Lie: Why Your Smart Thermostat Is A Trojan Horse for Energy Control

Forget high-tech fixes. New data reveals US indoor temperature control is a behavioral battlefield, not a technological one. Who profits from this illusion?