Nvidia Didn't Buy Groq for Chips—They Bought the Future of Inference. The $20 Billion Truth They Aren't Telling You.

Forget the hardware war. Nvidia's alleged $20B move for Groq isn't about GPUs; it's a preemptive strike against the coming 'Inference Bottleneck.'

Key Takeaways

- The $20B valuation reflects the strategic value of eliminating future inference competition.

- Groq's LPU architecture solves the efficiency crisis of running large models post-training.

- This acquisition signals the end of the GPU as the singular solution for all AI needs.

- Nvidia is consolidating control over both AI training and deployment infrastructure.

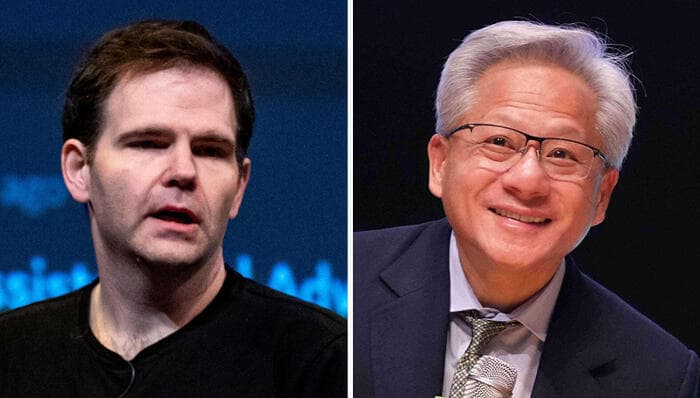

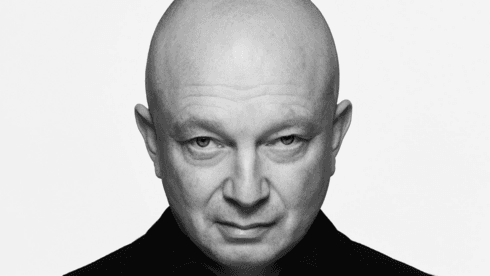

The Hook: Why a $20 Billion 'Talent Grab' is Actually a Declaration of War

The tech rumor mill is buzzing about Nvidia's supposed $20 billion play for Groq—a valuation that seems absurd for a startup whose primary claim to fame is speed in AI inference. But this isn't about acquiring a competing chip manufacturer. This is about securing the future of how AI models *run*, not just how they're trained. The unspoken truth behind this potential deal is that Jensen Huang sees the writing on the wall: the era of GPU dominance for every phase of AI is ending, and the next trillion-dollar fight will be won or lost in the deployment phase. This move is pure, ruthless strategic defense against the looming AI inference crisis.

The 'Meat': Talent and Technology Over Traditional Acquisition

Groq’s LPU (Language Processing Unit) architecture is built around sequential processing, which is exactly what large language models (LLMs) demand during inference, the phase where the model generates its response one token at a time. Nvidia's GPUs (like the H100 or Blackwell) remain unmatched for massively parallel training, but token-by-token generation tends to be limited by memory bandwidth rather than raw compute. That leaves much of a GPU's parallel hardware underused and makes serving millions of user requests comparatively inefficient and power-hungry. This is the AI inference bottleneck.
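The sequential nature of inference can be illustrated with a minimal sketch. It assumes a toy `toy_next_token` function as a hypothetical stand-in for a real model's forward pass; nothing here reflects Groq's or Nvidia's actual software. The point is only that each new token depends on all the tokens before it, so the steps of the loop cannot run in parallel.

```python
from typing import Callable, List


def generate(
    next_token: Callable[[List[int]], int],
    prompt: List[int],
    max_new_tokens: int,
) -> List[int]:
    """Autoregressive decoding: produce one token at a time.

    Step t needs the output of step t-1, so the loop is inherently
    sequential no matter how much parallel hardware is available.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(next_token(tokens))
    return tokens


def toy_next_token(tokens: List[int]) -> int:
    # Hypothetical stand-in for a model forward pass (an assumption for
    # illustration): the "prediction" depends on the entire history.
    return (sum(tokens) + len(tokens)) % 100


if __name__ == "__main__":
    print(generate(toy_next_token, [1, 2, 3], max_new_tokens=3))
```

Training, by contrast, sees the whole sequence at once and can process every position in parallel, which is why the same hardware can look excellent in one phase and wasteful in the other.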

If Nvidia acquires Groq, it isn't just absorbing top-tier talent; it is internalizing a completely different architectural philosophy and neutralizing a potential existential threat. Imagine a competitor suddenly offering 10x the performance at one-fifth the operational cost for real-time AI applications. That is the kind of threat Groq represents. For Nvidia, $20 billion is the premium price for eliminating a future competitor and integrating its specialized solution directly into the CUDA ecosystem, effectively locking down the deployment side of the equation.

The Why It Matters: The Death of the One-Size-Fits-All Chip

For years, the narrative has been simple: Nvidia makes the best chips for everything AI. If it goes through, this deal shatters that narrative. It would amount to an admission from the market leader itself that training and inference require fundamentally different hardware solutions. The move signals a fragmentation of the silicon landscape: away from the monolithic GPU and toward specialized accelerators for every step of the AI lifecycle.

The real loser here, besides potential acquirers like Microsoft or Google who might have been eyeing Groq, is the democratization of high-speed AI. By absorbing Groq, Nvidia tightens its grip. They control the training pipeline (GPUs) and now they are seizing control of the deployment pipeline (LPUs). This creates a nearly insurmountable moat around the entire generative AI infrastructure, forcing every startup and enterprise to remain tethered to the **Nvidia ecosystem**.

Where Do We Go From Here? A Prediction

Expect immediate, aggressive integration. Within 18 months, Nvidia will release an 'Inference Optimized' version of their software stack, heavily featuring Groq-derived scheduling and architecture principles, likely branded under a new 'Nvidia Serve' umbrella. My prediction is that we will see a massive shift in cloud provider investment away from general-purpose instances toward these highly specialized inference platforms. Furthermore, expect AMD and Intel to immediately accelerate their own specialized inference hardware projects, realizing that simply catching up on raw GPU compute power is no longer enough. The race is now about specialization and efficiency in serving live models.

Key Takeaways (TL;DR)

- Nvidia's reported bid for Groq is about dominating AI inference, not training compute.

- The deal neutralizes a massive future threat to their deployment monopoly.

- This confirms the industry shift toward specialized silicon for different AI tasks.

- The move further entrenches Nvidia’s control over the entire AI stack infrastructure.

Frequently Asked Questions

What is the primary difference between Nvidia GPUs and Groq's LPUs?

Nvidia GPUs excel at parallel processing required for training massive AI models. Groq's LPUs (Language Processing Units) are specifically designed for sequential processing, making them vastly more efficient and faster for real-time AI inference—the process of generating responses.

Why is AI inference considered the next major bottleneck?

As more complex models are deployed globally, the cost and latency associated with running these models (inference) become the dominant operational expense and performance limiter, rather than the initial training cost.
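A back-of-the-envelope sketch shows why serving costs can overtake training costs. The function name and every dollar figure below are hypothetical placeholders chosen for illustration, not reported numbers for any model or vendor.

```python
def break_even_requests(training_cost_usd: int, usd_per_million_requests: int) -> int:
    """Requests served before cumulative inference spend reaches the training cost.

    Training is a one-time expense; inference spend grows with every request.
    Integer arithmetic is used to avoid floating-point rounding.
    """
    if usd_per_million_requests <= 0:
        raise ValueError("usd_per_million_requests must be positive")
    numerator = training_cost_usd * 1_000_000
    # Ceiling division: round up to the first request that crosses break-even.
    return -(-numerator // usd_per_million_requests)


if __name__ == "__main__":
    # Hypothetical: $10M to train, $2,000 per million requests to serve.
    print(break_even_requests(10_000_000, 2_000))  # 5000000000
```

Under these assumed figures, a service handling a billion requests a day would pass the break-even point in five days, after which every additional request adds to an inference bill that has already exceeded the cost of training.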

Is this a traditional acquisition or a talent/technology buyout?

It reads as a technology and talent acquisition. Nvidia would be buying Groq's unique architectural approach and engineering team to integrate into its ecosystem, rather than buying manufacturing capacity or broad market share.

How does this affect competitors like AMD and Intel?

It forces competitors to accelerate their own specialized hardware development for inference. Simply matching Nvidia's training GPU performance is no longer sufficient; they must now compete on deployment efficiency.

DailyWorld Editorial

AI-Assisted, Human-Reviewed

Reviewed By

DailyWorld Editorial